SOMATIC DESIGN PROJECT 1 (SDP1) OVERVIEW

The Challenge 📌

This “critical making” project focuses on developing and building a multi-sensory tool that will engage a specific user group’s multiple somatic senses through their interactions with media technologies.

Our Design Solution 🔑

Our team created a tactile speaker that enhances the musical experiences of users with hearing- and vision-related disabilities by engaging four main somatic senses: sound, sight, touch, and motion.

Role

Research, Ideating, Prototyping

Toolkit

Figma, Physical Materials

Duration

2 months

RESEARCH 🗒️

FINDINGS 🤳🏽

Visually impaired users struggle to find and adjust volume controls without trial and error or the need for assistance.

Exploring Further

Our team chose to design a solution for visually and hearing impaired users. We had a potential idea for a tactile speaker, but we first needed to gain a deeper understanding of our users’ struggles and of how to engage specific somatic senses through the speaker’s design.

The following is the secondary research we found:

A large percentage of legally blind people can still perceive light because they retain some remaining vision.

A tactile speaker engaging our users' senses of touch, sight, motion, and hearing. The following is what we plan to implement:

IDEATION 🧠

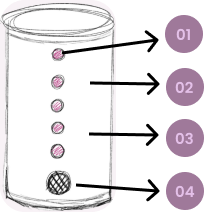

After further ideation on the features we wanted to add to our prototype, we made the sketch below, which includes the following 4 main components:

Objectives

The following components are what we aimed to take into consideration throughout our entire process.

Incorporating materials that are sustainable, recyclable, and durable to ensure the speaker lasts through consistent use.

Designing for our visually and hearing impaired users while catering to other considerations these user groups may face, such as sensitivity to light.

Our Idea

LOW-FIDELITY PROTOTYPE 🖊️

Further Conception

We used the following materials to build our prototype:

There were five steps in our production plans:

Course Project, Mobile Application, Physical Prototype

1) We located a suitable plastic cup, durable and reusable, to serve as the base and structure for the speaker.

2) We outlined in pen where the LEDs and sensor would poke out, then cut along those outlines with scissors.

3) After we programmed the LED lights and sensors with the Arduino Uno, we inserted them into the cup through the cuts we made earlier.

4) We used the lid that came with the cup to hold all the components inside (Arduino Uno, LED lights, wires, etc.).

5) For decoration and to ensure the Arduino and wires were covered from the outside, we taped paper around the cylinder structure.
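As a rough illustration of the programming in step 3, here is a minimal C++ sketch of how a sound intensity reading could be turned into a number of LEDs to light. It is not our actual Arduino sketch: the function name `ledsToLight`, the noise floor of 100, and the LED count passed in are assumptions made for this example. The only fixed detail is that an Arduino Uno analog input reads from 0 to 1023.

```cpp
// Highest value an Arduino Uno analog input can report (10-bit ADC).
const int kAdcMax = 1023;

// Returns how many of `ledCount` LEDs should be lit for a raw sensor reading.
// Readings at or below `noiseFloor` (a hypothetical quiet-room level) light none.
int ledsToLight(int reading, int ledCount, int noiseFloor = 100) {
    if (ledCount <= 0) return 0;

    // Clamp the reading into the valid ADC range.
    if (reading < 0) reading = 0;
    if (reading > kAdcMax) reading = kAdcMax;
    if (reading <= noiseFloor) return 0;

    // Scale the range above the noise floor onto 1..ledCount, rounding up
    // so that any sound above the floor lights at least one LED.
    const int span = kAdcMax - noiseFloor;
    int level = ((reading - noiseFloor) * ledCount + span - 1) / span;
    if (level > ledCount) level = ledCount;
    return level;
}
```

On the board, the reading would typically come from `analogRead()` on the sensor pin, and the returned count would decide which LED pins are set high with `digitalWrite()` on each pass through `loop()`.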

PROTOTYPING 🛠️

Production Methods

SIGHT

MOTION

SOUND

Nida Shanar, Ryu Chen, Vanessa Lin, Megan McMullen

Hearing impaired users can sense vibrations through skin contact, processing them in the same part of the brain that hearing people use for hearing.

The exterior of the speaker will emit vibration patterns that follow the song playing so that users can "feel" the music when touching its structure.

Motion sensors placed within the interior react to gestures made close to the speaker to increase or decrease the music's volume, making the controls more accessible.

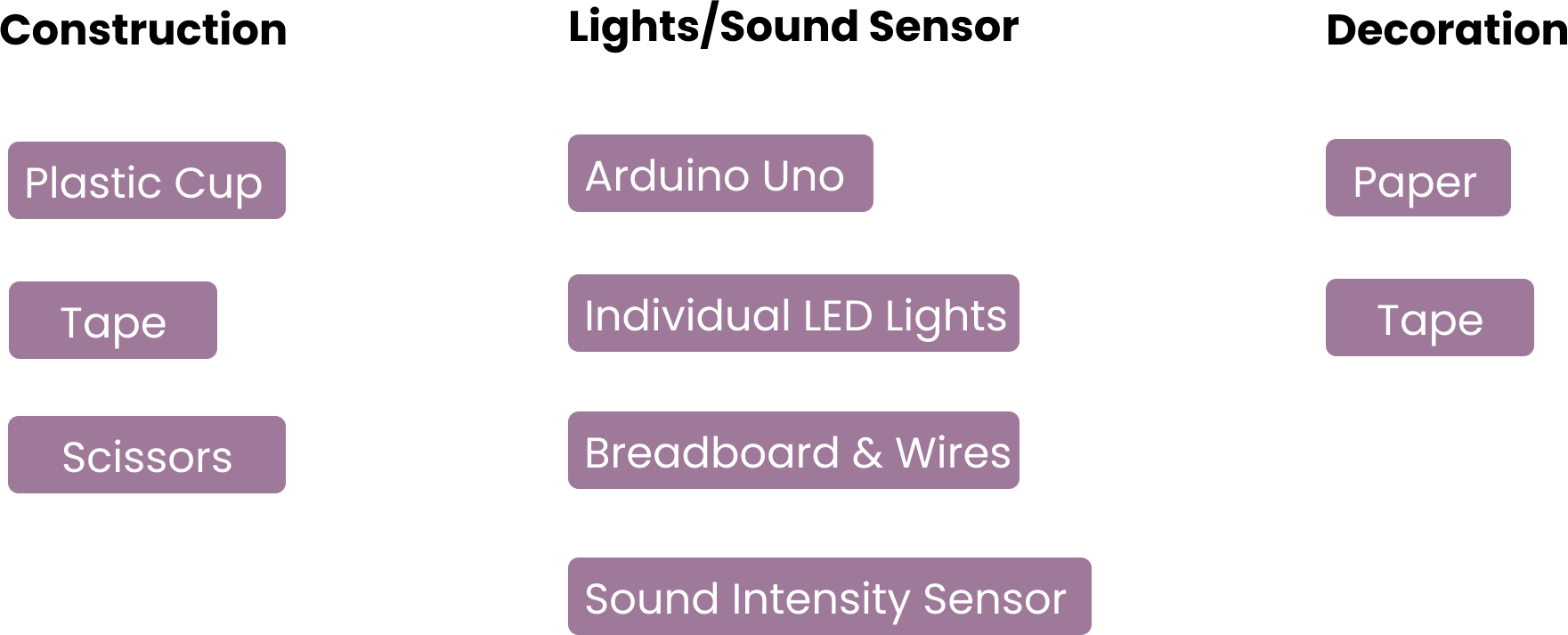

Here is the final prototype we built:

Team

The speaker functioning when playing music into the sound intensity sensor.

For SDP2, our team took our SDP1 idea beyond the speaker's exterior and expanded on it....

HIGH-FIDELITY PROTOTYPE 🖤

The Final Product

The front of the speaker where the LED lights and intensity sensor poke out.

The bottom of the speaker where the components are held inside.

SOMATIC DESIGN PROJECT 2 (SDP2) OVERVIEW

The Premise 📌

This maker project focuses on students producing designs that connect to the internet of things via electronic components while engaging multiple somatic experiences for specific users.

Our Design Solution 🔑

We continued with our SDP1 design but adapted it to the SDP2 premise: we placed a QR code on the tactile speaker for users to scan with their smartphones, which connects them to a webpage application displaying their music statistics and a set of controls.

Duration

IDEATION & LOW-FIDELITY PROTOTYPE 💡

Project Type

Team

To ensure that each feature, and the somatic sense it engages, operates efficiently and works the way we planned.

1 month

A tactile speaker that engages the senses of touch, sight, motion, and hearing through its connection to the internet and use of electronic components.

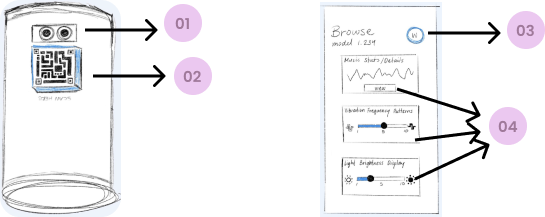

The following is our low-fidelity prototype displaying what we plan to implement:

PHYSICAL PROTOTYPE

MOBILE APPLICATION

SOUND/MOTION

TOUCH

Our Idea

TOUCH

LED bulbs that light up to the beat of the song, paired with a light-diffusing shade that softens their brightness; both work to enrich our users' experience visually.

A sound intensity sensor that simulates where the music would play from, responding to the music users choose to play.

SOUND

TOUCH

The exterior of the QR code will have a 3D border to help users feel where it's located when touching the structure.

A digital assistant tool called "Wiress" that helps visually impaired users navigate the webpage application.

When users move their smartphone around the speaker's structure, the speaker will emit a buzzing sound to tell them they have successfully located the QR code to scan.

Vibration frequency pattern controls and LED light brightness display controls; users can scroll left for a lower level and right for a higher level with their fingers. They can also view their music stats and other details.

We used the following materials to build our prototype:

PROTOTYPING 🛠️

Production Methods

There were five steps in our production plans:

1) We began by making a Figma file in which we would later build the webpage components and make them interactive.

2) We then took the file link and pasted it into a QR code generator tool.

3) We printed that same QR code onto paper, cut it out, and taped it onto a piece of cardboard so it stayed flat enough to scan. When we initially taped it onto the curved body of our prototype speaker, it wasn't scanning.

4) We then made 3D cardboard border pieces and taped them to the sides of the QR code so that it's elevated enough for visually impaired users to feel where it's located.

5) Finally, we wired our ultrasonic sensor and buzzer to the Arduino Uno and programmed them so that the ultrasonic sensor controls the buzzer.
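To show how the ultrasonic sensor can control the buzzer in step 5, here is a minimal C++ sketch of the decision logic. It is an illustration under assumptions, not our exact code: it assumes a sensor that reports the round-trip echo time in microseconds, and the names `echoToCentimeters` and `shouldBuzz` and the 10 cm trigger distance are hypothetical.

```cpp
// Approximate speed of sound in air at room temperature, in cm per microsecond.
const double kSoundCmPerUs = 0.0343;

// Converts a round-trip echo time into a one-way distance in centimetres.
double echoToCentimeters(unsigned long echoMicros) {
    return echoMicros * kSoundCmPerUs / 2.0;
}

// Decides whether the buzzer should sound: only when something (such as a
// smartphone) is within `triggerCm` of the sensor. A distance of 0 is treated
// as "no echo received" and never triggers the buzzer.
bool shouldBuzz(double distanceCm, double triggerCm = 10.0) {
    return distanceCm > 0.0 && distanceCm <= triggerCm;
}
```

On the Arduino, the echo time would typically be measured with `pulseIn()` on the sensor's echo pin, which returns 0 if no echo arrives before the timeout, and the buzzer could be switched with `tone()` and `noTone()` depending on the result of `shouldBuzz`.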

Here is the final prototype we built:

Physical QR Code Components

HIGH-FIDELITY PROTOTYPE 🖤

The Final Product

The back of the speaker where the 3D QR code is located.

The side view of the speaker where you can notice the buzzer.

Webpage Application

The music statistics page displaying the following info.

The songs played page where users can see their most recent song played.

The beginning screen once users scan the QR code and access the app.

The browse page for users to customize their speaker's controls or view music statistics.